Async HTTP benchmarks on PyPy3

Hello everyone,

Since Mozilla announced funding, we've been working quite hard on delivering you a working Python 3.5.

We are almost ready to release an alpha version of PyPy 3.5. Our goal is to release it shortly after the sprint. Many modules have already been ported and it can probably run many Python 3 programs already. We are happy to receive any feedback after the next release.

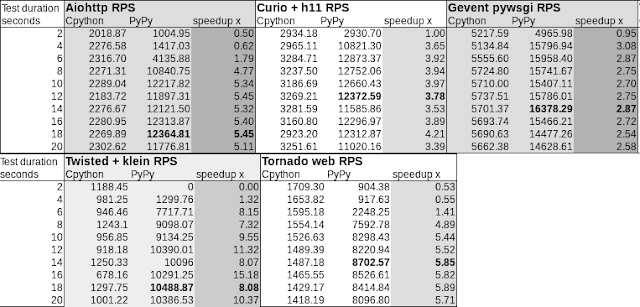

To show that the heart of Python 3 (asyncio) is already working, we have prepared some benchmarks. They were done by Paweł Piotr Przeradowski @squeaky_pl for an HTTP workload on several asynchronous I/O libraries, namely the relatively new asyncio and curio libraries and the battle-tested tornado, gevent and Twisted libraries. To see the benchmarks, check out https://github.com/squeaky-pl/zenchmarks; instructions for reproducing them can be found in README.md in the repository. Raw results can be obtained from https://github.com/squeaky-pl/zenchmarks/blob/master/results.csv.

The purpose of the presented benchmarks is to show that the upcoming PyPy release already works with unmodified code that runs on CPython 3.5. PyPy also manages to make that code run significantly faster.

The benchmarks consist of HTTP servers implemented on top of the mentioned libraries. All the servers are single-threaded and rely on the underlying event loops to provide concurrency. Access logging was disabled to exclude terminal I/O from the results. The view code consists of a lookup in a dictionary mapping ASCII letters to verses from the famous Zen of Python. If a verse is found, the view returns it; otherwise a 404 Not Found response is served. The 400 Bad Request and 500 Internal Server Error cases are also handled.
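A minimal sketch of such a server, written against plain asyncio streams, may help picture the setup. It is not the code from the zenchmarks repository: the `VERSES` table holds only three verses, and the names `view`, `render`, `handle` and `main`, the port 8080 and the line-based request parsing are assumptions made for illustration. It sticks to Python 3.5 syntax, so it should run unmodified on both CPython 3.5 and the PyPy 3.5 branch.

```python
import asyncio
import string

# Hypothetical excerpt of the lookup table: ASCII letters -> Zen of Python verses.
VERSES = {
    "a": "Beautiful is better than ugly.",
    "b": "Explicit is better than implicit.",
    "c": "Simple is better than complex.",
}

REASONS = {200: "OK", 400: "Bad Request", 404: "Not Found",
           500: "Internal Server Error"}


def view(path):
    """Map a request path such as '/a' to an (HTTP status, body) pair."""
    key = path[1:]
    # Anything other than '/' followed by a single ASCII letter is malformed.
    if not path.startswith("/") or len(key) != 1 or key not in string.ascii_letters:
        return 400, REASONS[400]
    verse = VERSES.get(key)
    if verse is None:
        return 404, REASONS[404]
    return 200, verse


def render(status, body):
    """Serialize a minimal HTTP/1.1 response with a plain-text body."""
    payload = body.encode("utf-8")
    head = ("HTTP/1.1 {} {}\r\n"
            "Content-Type: text/plain; charset=utf-8\r\n"
            "Content-Length: {}\r\n\r\n").format(status, REASONS[status], len(payload))
    return head.encode("ascii") + payload


async def handle(reader, writer):
    # Serve requests on one keep-alive connection until the client closes it.
    while True:
        request_line = await reader.readline()
        if not request_line:
            break
        # Skip the request headers, which end with an empty line.
        while True:
            header = await reader.readline()
            if header in (b"\r\n", b"\n", b""):
                break
        try:
            parts = request_line.decode("ascii").split()
            if len(parts) != 3 or parts[0] != "GET":
                status, body = 400, REASONS[400]
            else:
                status, body = view(parts[1])
        except UnicodeDecodeError:
            status, body = 400, REASONS[400]
        except Exception:
            # Any unexpected failure in the view becomes a 500 response.
            status, body = 500, REASONS[500]
        writer.write(render(status, body))
        await writer.drain()
    writer.close()


def main(host="127.0.0.1", port=8080):
    # Single-threaded: one event loop, no access logging per request.
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    server = loop.run_until_complete(asyncio.start_server(handle, host, port))
    try:
        loop.run_forever()
    except KeyboardInterrupt:
        pass
    finally:
        server.close()
        loop.close()
```

Calling `main()` starts the server; a request such as `curl http://127.0.0.1:8080/a` should then return the first verse, while `/z` yields a 404 and `/ab` a 400. Keeping `view` free of I/O makes the lookup logic easy to test on its own.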

The workload was generated with the wrk HTTP benchmarking tool. It is run with one thread opening up to 100 concurrent connections for 2 seconds, and this is repeated 1010 times to get consecutive measurements. A Lua script is provided that instructs wrk to continuously send 24 different requests hitting different execution paths (200, 404, 400) in the view code. It is also worth noting that wrk only counts 200 responses as successful, so the actual requests-per-second throughput is higher.
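To make the last point concrete: a rate computed from 200 responses alone leaves out the 404 and 400 responses the server also produced. The tiny helper below (its name is hypothetical) computes both rates; the counts in the example are made up and are not taken from results.csv.

```python
def throughput(status_counts, duration_s):
    """Return (rate of 200 responses, rate of all responses) per second.

    status_counts maps an HTTP status code to the number of responses seen."""
    ok = status_counts.get(200, 0)
    total = sum(status_counts.values())
    return ok / duration_s, total / duration_s


# Illustrative numbers only, for one 2-second run.
print(throughput({200: 1000, 404: 300, 400: 100}, 2.0))  # (500.0, 700.0)
```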

For your convenience, all the library versions used are vendored into the benchmark repository. A precompiled portable version of wrk is also provided that should run on any reasonably recent (no more than 10 years old) Linux x86_64 distribution.

The benchmark was performed on a Scaleway public cloud x86_64 server launched in a Paris data center. The server was running Ubuntu 16.04.01 LTS and reported an Intel(R) Xeon(R) CPU D-1531 @ 2.20GHz. CPython 3.5.2 (shipped by default in Ubuntu) was benchmarked against a pypy-c-jit-90326-88ef793308eb-linux64 snapshot of the 3.5 compatibility branch of PyPy.
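When reproducing the comparison, it helps to record exactly which interpreter ran each server. A small standard-library helper (the function name is hypothetical) can print this; `sys.pypy_version_info` only exists on PyPy, so it is looked up with `getattr`.

```python
import platform
import sys


def describe_interpreter():
    """Return a short string identifying the running Python implementation."""
    impl = platform.python_implementation()  # e.g. "CPython" or "PyPy"
    version = platform.python_version()      # language version, e.g. "3.5.2"
    desc = "{} {} on {}".format(impl, version, platform.machine())
    # PyPy additionally reports its own release number.
    pypy = getattr(sys, "pypy_version_info", None)
    if pypy is not None:
        desc += " (PyPy {}.{}.{})".format(pypy.major, pypy.minor, pypy.micro)
    return desc


print(describe_interpreter())
```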

We want to thank Mozilla for supporting our work!

Cheers,

fijal, squeaky_pl and the PyPy Team


Comments

This is fantastic! How close to ready is the async/await syntax? Any chance it could be snuck in the 3.5 release?

As far as I know, curio (and maybe asyncio) wouldn't run if we didn't properly support async/await already.

Great news, you are doing awesome work! Any chance cpyext will be included in the alpha?

cpyext will be included. We expect C-API support to be approximately on par with pypy2, e.g. the pypy3 nightlies have nearly complete support for numpy.

Awesome work!

@Benjamin, async def / async for / async with / await were all introduced in Python 3.5.
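For readers less familiar with these Python 3.5 additions, here is a short sketch exercising all four constructs. It uses a class-based asynchronous iterator because async generators only arrived in Python 3.6, and the `__aiter__` form shown assumes Python 3.5.2 or later. `Countdown`, `Resource` and `demo` are illustrative names.

```python
import asyncio


class Countdown:
    """Asynchronous iterator usable with `async for`."""

    def __init__(self, start):
        self.current = start

    def __aiter__(self):
        return self

    async def __anext__(self):
        if self.current <= 0:
            raise StopAsyncIteration
        await asyncio.sleep(0)  # give the event loop a chance to run other tasks
        self.current -= 1
        return self.current + 1


class Resource:
    """Asynchronous context manager usable with `async with`."""

    def __init__(self):
        self.log = []

    async def __aenter__(self):
        self.log.append("open")
        return self

    async def __aexit__(self, exc_type, exc, tb):
        self.log.append("close")
        return False  # do not suppress exceptions


async def demo():  # async def defines a native coroutine
    res = Resource()
    async with res:  # async with calls __aenter__ / __aexit__
        async for n in Countdown(3):  # async for calls __anext__ until StopAsyncIteration
            res.log.append(n)
        await asyncio.sleep(0)  # await suspends until the awaitable completes
    return res.log


loop = asyncio.new_event_loop()
try:
    result = loop.run_until_complete(demo())
finally:
    loop.close()
print(result)  # ['open', 3, 2, 1, 'close']
```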

This is wonderful work, congrats!

This is great. It would be good to include some alternate asyncio back-ends as well if they work with pypy.

For instance, my current project uses libuv and gbulb in different components.

Will this work with uvloop? I'm curious as I would like to get Sanic running on this! :-)

From what I've read on #pypy (sorry if I'm messing something up): uvloop is a drop-in replacement of asyncio, but asyncio is faster on PyPy. PyPy's JIT for pure Python code beats the overheads of the CPython API compatibility layer (in this case, via Cython). Moreover, considering the whole application, using asyncio on PyPy easily beats using uvloop on CPython. So, as long as it remains a fully compatible replacement, you can "drop it out" and use asyncio instead on PyPy.
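Following that comment, one way to keep uvloop optional is to install its event loop policy only on CPython, and only when it is importable, falling back to asyncio's default loop otherwise. This is a sketch: the helper name is hypothetical, and the performance trade-off described above is the commenter's observation, not something this code measures.

```python
import asyncio
import platform


def install_event_loop_policy():
    """Select uvloop on CPython when available, else keep asyncio's default.

    Returns the name of the event loop implementation that was selected."""
    if platform.python_implementation() == "PyPy":
        # On PyPy, stay with the pure-Python asyncio loop the JIT can optimize.
        return "asyncio"
    try:
        import uvloop  # optional third-party dependency
    except ImportError:
        return "asyncio"
    asyncio.set_event_loop_policy(uvloop.EventLoopPolicy())
    return "uvloop"
```

Calling this once at startup, before any event loop is created, leaves the rest of an asyncio application unchanged on either interpreter.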